When all positives are false: the emergence of an academic whodunnit

Many educators accept the idea that we need to systematically assess the performance of our students. Easily accessible writing tools complicate the practice of assessment: how do we gauge whether an assignment is original work? In the age of AI, academic integrity is at stake – or so we’re told.

On 4 April, Turnitin, a company that offers a widely used plagiarism detection system, released a preview version of its “AI Writing Detection” toolkit. The system calculates what proportion of a student assignment is assumed to be written by generative language models such as OpenAI’s wildly successful ChatGPT. Conveniently for instructors, this score integrates with other Turnitin features; it simply adds a column to a summary report that serves to flag likely instances and sources of plagiarism. The ultimate aim is to “help educators uphold academic integrity while ensuring that students are treated fairly”. The introduction of this system, and its adoption by educators at our institution, offers an entry point for examining this critical juncture in higher education. As Susan Blum has put it, “Anthropology needs more attention to learning, and society, so consumed with schooling and learning, needs to hear from anthropologists.”

Amplifying mistrust

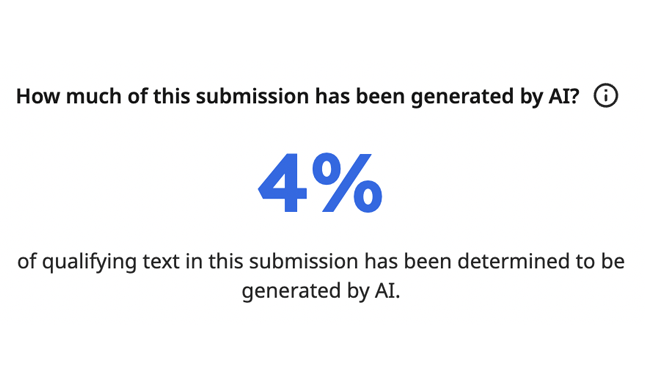

As things stand, the detection system’s reports are visible only to instructors and administrators. Students cannot access the report; what is more, this is pitched as a key feature. Students are thus left without recourse or opportunity for recursion – that is, the ability to interact with the system and see what standards it expects from them. Such a feedback loop would be important, not least because institutions such as the Leiden Learning & Innovation Centre and UNESCO propose experimenting with chatbots. (Turnitin even published a blog post to help “develop AI literacy amongst educators and students” in order to “enhance teaching workflows” and “empower higher-level thinking” among students.) There are many ways in which generative techniques might be used in an anthropological assignment, including exploring their technical features and encoded biases. Turnitin’s system translates all such uses into a percentage of the “qualifying text” that “has been determined to be generated by AI”.

At Leiden University, instructors were not consulted prior to the introduction of this feature. Although the feature is only a preview release, there is “no ability to turn off AI detection”. Without the possibility to opt out, instructors suddenly found an additional number next to their students’ assignments, with no guidance about what this number means or what consequences should follow from it. This number quickly joined other evaluation metrics that academics regularly encounter, such as the h-index and journal impact factors, which lay dubious claim to measuring outcomes. As with Niels Bohr’s horseshoe, instructors don’t have to believe in these metrics for them to work. And work they did: within just two weeks, instructors and students found themselves pitted against each other as accuser and accused, with all the stress and strain that entails on either side. Although Turnitin frames the system as something “created for educators with educators”, we notice that services like these further amplify mistrust between educators and students.

Ideological adjustment

While easily accessible products leveraging large language models are new entrants in 2023, the introduction of the AI Writing Detection tool by Leiden University and other institutions fits repertoires that are by now well established across education in the Netherlands. In the mid-1970s, anthropologist Theo Jansen published an ethnography on practices of control and authority in the classroom. Conducting research at four ideologically distinct elementary schools in the Netherlands, he noticed a tendency among many teachers to encourage or enforce docile and obedient behaviour. From capitalist modes of competition in the classroom to relationships of dependency between teachers and students, Jansen unpacked how education became inflected by dominant cultural tendencies: individualism, productivity, hierarchy, order.

This mode of schooling has since become entrenched, and it has also made significant inroads in higher education. The Humboldtian ideal of an education based on the guiding principles of “solitude and freedom” has its own problems, but recalling it from time to time can help us see just how far the educational mission of our institutions has drifted. Self-development has largely been supplanted by an unending string of assessments and other occasions for pleasing instructors and receiving grades.

For these assessments to be meaningful – not in the sense of being a worthwhile use of students’ time, but in the sense of measuring individual achievement – institutions need to spend a lot of time worrying about standards. Does the work students are being assessed for truly reflect their individual effort? Does this effort equate to the allocated ECTS points? Or, in short, how can we continue providing the kind of education we have grown to appreciate (and think students need to succeed in their professional lives)?

Generative plagiarism anxiety

Under the umbrella of academic integrity, anxieties about plagiarism and other forms of fraudulent behaviour, often assumed to be rampant, come to dictate policy and mediate relations between educators and students. Rather than working together to create knowledge, instructors and students are pitted against each other by proliferating mistrust, cast as antagonists in an elaborate academic whodunnit. In 2005, the Dutch State Secretary for Higher Education and Science, on a mission to make Dutch higher education more internationally competitive, demanded strict punishments for students found to have submitted plagiarised work. (That State Secretary, Mark Rutte, went on to become the country’s longest-serving prime minister, in office to this day.) In 2007, Leiden University first provided an automated plagiarism detector called Ephorus, which was later acquired by iParadigms, makers of Turnitin. Use of Turnitin has since become standard practice among instructors not just at our own university, but at thousands of institutions around the world.

Because Turnitin is integrated with learning-management systems like Blackboard, Canvas, and Brightspace, enabling it is often just one click away when instructors create an assignment submission form. Every paper submitted through a Turnitin assignment becomes part of the company’s reference corpus, used to highlight offending material with the help of proprietary text-analysis techniques. This means that students have to agree to a user agreement that grants Turnitin a “non-exclusive, royalty-free, perpetual, worldwide, irrevocable license” to their work. In so doing, their work becomes part of the ever-growing backlog of student material used to inspect incoming submissions: by uploading a text you’re helping to train a monetised model used for inspecting the work of your peers across the globe.

Though use of plagiarism detection is now widely normalised, it is worth recalling that in the early days it was often subject to spirited debate. As recently as 2018, the University of Amsterdam saw a heated controversy over the use of Turnitin after Hans de Zwart, a philosophy graduate student who was then also director of the advocacy organisation Bits of Freedom, objected to uploading his work to the system due to the “absurd” terms he would have to agree to. He was joined by instructors who deemed Turnitin unethical.

AI detection as political artifact

The introduction of the AI Writing Detection tool was announced as a fait accompli, a mere technical feature being added to a product, and a convenient one at that. However, as we have seen in the weeks since its introduction, it is not a neutral tool; instead, as Langdon Winner famously put it, its introduction can be likened to a legislative act that makes and transforms institutions. This legislative act is shrouded in the bland language of product features and technical specifications, so its primary addressees – educators – find fault with themselves for being inept instead of faulting an ill-advised new policy.

We can only speculate how much self-doubt, how many gut-wrenching moments, how many unnecessary emails and pointless confrontations this botched policy has already caused. So far, it seems unlikely that anyone will be held accountable for this, since we lack a forum and a language in which to critically address AI writing detection services. In recent years Leiden University has seen successful protests occasioned by CameraGate – that is, the clandestine deployment of cameras euphemistically referred to as “people counters” in all classrooms – but other surveillance systems have often escaped scrutiny. Tools for ensuring academic integrity are simply above critique, because critics run the risk of being seen as defenders of fraud and cheating. It is, as bell hooks writes, “so difficult to change existing structures [in education] because the habit of repression is the norm”.

An education beyond credentialism

Despite the difficulties inherent in doing so, we think this moment demonstrates the value of asking what (else) a pedagogy in the age of generative AI could be. Anthropological and sociological perspectives are particularly well suited to formulating such a pedagogy: we can ask whether the tools we’ve grown to cherish or despise truly serve the work of education rather than the disciplining and stratifying functions of the university. Or, to take up the suggestion by Rotterdam-based collective Varia: what can we as anthropologists do to break away from technological maximalism and move towards “minimum viable learning”?

Image Credit: Two medicine vendors, their wives, cats and dogs arguing about the merits of their antiscorbutic pills. Etching by J. Bretherton after H.W. Bunbury, 1774. Wellcome Collection. Public Domain Mark.
