How robots took over our lives and we failed to notice

Robots taking over the human race: an apocalyptic future or everyday reality? From dystopian science fiction imagery to reflections on algorithm culture.

The dystopian vision of a future in which robots take over the human race is as old as it is powerful—and is always in ‘the future’. Or is it? Could it be that we already live in such a world, but the robotic domination is less spectacular and much more mundane?

The spectacular robotic takeover

The idea of robots taking over humankind usually rests on images of large, powerful, animated entities seizing power, typically through violent and dramatic struggle. Just think of early examples such as Leonardo da Vinci’s mechanical knight of the late 15th century or Mary Shelley’s famous Frankenstein (19th century), or of more recent versions such as the Terminators and Transformers. But maybe these images blind us to the really significant relationships that humans already have with technology? Could it be that we are already living in a world run by robots, and that they are governing our lives on a daily basis? Instead of humanoid robots with antennas, or huge, screeching Transformers from outer space, the really significant takeover could be much more mundane, boring, and far less spectacular.

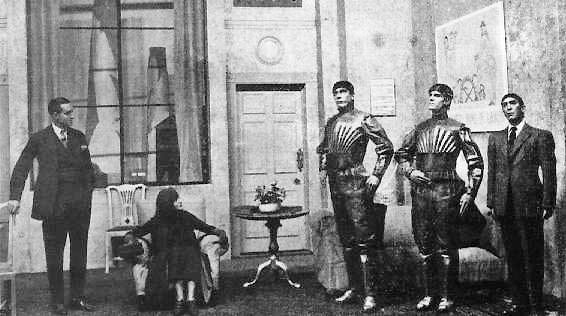

R.U.R., a 1920 science fiction play by the Czech writer Karel Čapek

Algorithm Culture

In recent years, scholars in the social sciences have become concerned with what is often called ‘Algorithm Culture’: the idea that our lives are increasingly regulated and shaped by complex computer systems and algorithmic calculations that classify, sort, and channel user behaviour.

The easiest way to think about an algorithm is as a device that draws conclusions: ‘if x, then y’. For example: ‘If you watch a lot of movies classified as “historical drama”, the new episodes of Game of Thrones are likely to appeal to you.’ And indeed, algorithms shape many of the routine choices we make on a daily basis: what to read or listen to, or which route to take when following Google Maps. When you look for a movie on Netflix, a book on Amazon, or a song on Spotify, the choices presented to you are calculated by algorithms based on particular assumptions about the world and human behaviour. To a large degree, these seem like welcome conveniences.
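To make the ‘if x, then y’ idea concrete, here is a minimal sketch in Python. It is purely illustrative and is not how Netflix, Amazon, or Spotify actually work. The function names, the 50% threshold, and the example titles are all assumptions made up for this example.

```python
from collections import Counter


def dominant_genres(history, threshold=0.5):
    """Return the genres that make up at least `threshold` of what was watched.

    `history` is a list of (title, genre) pairs. The threshold is an
    arbitrary assumption: it encodes what counts as 'a lot'.
    """
    if not history:
        return set()
    counts = Counter(genre for _title, genre in history)
    return {genre for genre, n in counts.items() if n / len(history) >= threshold}


def recommend(history, catalogue, threshold=0.5):
    """'If you watch a lot of genre x, then suggest unseen titles of genre x.'"""
    watched = {title for title, _genre in history}
    liked = dominant_genres(history, threshold)
    return [
        title
        for title, genre in catalogue
        if genre in liked and title not in watched
    ]


# Hypothetical viewing history and catalogue, invented for illustration.
history = [
    ("Title A", "historical drama"),
    ("Title B", "historical drama"),
    ("Title C", "comedy"),
]
catalogue = [
    ("Title A", "historical drama"),
    ("Title D", "historical drama"),
    ("Title E", "comedy"),
]
print(recommend(history, catalogue))
```

Even in this toy version, the ‘particular assumptions about the world’ are easy to spot: someone had to decide which genre labels exist, which label each title gets, and how much viewing counts as ‘a lot’.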

‘Binary’, by bykst (Pixabay)

The mundane rule of robots

Algorithmic governance doesn’t stop with entertainment, though. One could say that algorithms play a role in every aspect of life that is subject to automation. Their uses range from regulating traffic, detecting plagiarism, identifying possible threats in public spaces, and dynamically pricing airline tickets to matching people on online dating sites, estimating your creditworthiness, and determining which of your friends’ updates you see on Facebook. Such algorithms deeply shape the ways in which we understand and interact with the world.

Most of us, however, have no idea how we are classified, on what basis, and with what consequences for our lives. While being identified as a ‘fan of indie movies with strong female leads’ may seem to have little significance, being labelled a ‘potential terrorist’ and having your house searched because you happened to look up ‘pressure cooker’ and ‘backpack’ online in the aftermath of the Boston Marathon bombing puts it all in a rather different light. Law professor Frank Pasquale calls this the ‘Black Box Society’ and argues that while for many people algorithmic automation is a matter of ‘convenience’, it is those who are already most vulnerable in society who suffer most from such opaque classification practices.

Robots in and out of control

While much of the concern about algorithmic rule stems from the recognition that most such algorithms are designed primarily to serve the economic interests of companies rather than users, it seems equally clear that algorithmic governance doesn’t have a single, overarching ideology. When many algorithms interact, the effects they create cannot be fully predicted or understood, even by the people who develop them. The workings of high-speed trading and the infamous Flash Crash provide a good example. In 2010 the U.S. stock market collapsed and recovered in a matter of 36 minutes, in the process wiping out ‘nearly $1 trillion in market value’ and leaving everyone involved puzzled about what had happened. On a smaller scale, we can see similar volatility when we stumble upon a weirdly priced book on Amazon, as the biologist Michael Eisen described in 2011. A member of his lab wanted to buy the biology classic The Making of a Fly by Peter Lawrence and found that it cost a mere $23,698,655.93 (plus $3.99 shipping).
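How can two simple pricing rules produce a book that costs millions? The sketch below is one hedged illustration in Python. It assumes two sellers: one always prices slightly below its competitor, the other always prices well above. The default multipliers are the ones I recall from Eisen's own write-up of the case and are not stated in this post, so treat them as assumptions. The starting prices and function names are hypothetical.

```python
def simulate_repricing(price_a, price_b, undercut=0.9983, markup=1.270589, rounds=10):
    """Let two automated sellers reprice against each other.

    Each round, seller A sets its price to `undercut` times seller B's price,
    then seller B sets its price to `markup` times seller A's new price.
    Returns the list of (price_a, price_b) pairs, starting with the inputs.
    """
    history = [(price_a, price_b)]
    for _ in range(rounds):
        price_a = round(undercut * price_b, 2)
        price_b = round(markup * price_a, 2)
        history.append((price_a, price_b))
    return history


def rounds_until(limit, price_a, price_b, undercut=0.9983, markup=1.270589,
                 max_rounds=1000):
    """Count repricing rounds until seller B's price exceeds `limit`.

    Returns None if that does not happen within `max_rounds`.
    """
    for n in range(1, max_rounds + 1):
        price_a = round(undercut * price_b, 2)
        price_b = round(markup * price_a, 2)
        if price_b > limit:
            return n
    return None


# Hypothetical starting prices, invented for illustration.
for a, b in simulate_repricing(35.00, 40.00, rounds=5):
    print(f"seller A: {a:>10.2f}   seller B: {b:>10.2f}")

print(rounds_until(1_000_000, 35.00, 40.00))
```

Neither rule is unreasonable on its own. But because the two multipliers multiply to more than 1, every round raises both prices by a fixed percentage, and with these numbers it takes only a few dozen rounds to pass a million dollars. Nobody designed that outcome. It emerges from the interaction.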

Scene from the motion picture ‘I, Robot’ (Alex Proyas, 20th Century Fox, 2004)

How robots took over our lives and we failed to notice?

By comparing the rather abstract notion of an algorithm with the culturally charged image of a robot in this blog post, I hoped to provoke readers to reflect on their relationship with the automated systems that make many of our daily decisions and define the options available to us. Together with my colleague Hanna Schraffenberger, I am interested in exploring how we can better understand the various ways in which algorithms play a role in our lives, and how we as scholars can study this phenomenon.

Get involved!

During the 14th EASA2016 biennial conference in Milan this summer, Hanna and I will lead an interactive, hands-on laboratory where, together with the other participants, we’ll try to understand some basic principles of algorithmic culture and develop study materials that everyone can use to explore this topic. If you’re attending, please join us on Thursday 21 July at 09:00 in room 2. If you can’t join but still want to get in touch with us, visit our website, www.livingwithalgorithms.com.

1 Comment

"Algorithm culture" is an important concept that connects data science with changes in everyday life. It can be seen as analogous to "audit culture", a concept used in the early 2000s to chart the ways in which a specific quantitative monitoring practice from accounting spread into very widespread forms of social organisation, even including qualitative forms (such as ethical codes). It should be one of the core concepts in a new line of research usually designated as the ethnography of infrastructure, which tells us about the often invisible ways in which absent agents and their classifications determine what we do (as outlined, for example, by the work of Geoffrey Bowker and Leigh Star). Leiden anthropologists are keen to collaborate with colleagues in setting up such research lines.
